Abstract

Conventional methods for testing independence between two Gaussian vectors require sample sizes greater than the number of variables in each vector. Therefore, adjustments are needed for the high-dimensional situation, where the sample size is smaller than the number of variables in at least one of the compared vectors. It is critical to emphasize that the methods available in the literature are unable to control the Type I error probability under the nominal level. This fact is evidenced through an intensive simulation study presented in this paper. To cover this lack, we introduce a valid randomized test based on the Kronecker delta covariance matrices estimator. As an empirical application, based on a sample of companies listed on the stock exchange of Brazil, we test the independence between returns of stocks of different sectors in the COVID-19 pandemic context.

Similar content being viewed by others

1 Introduction

The likelihood ratio test approach has been widely considered in testing the independence between two groups of random variables under the assumption of normality. And, the traditional likelihood ratio test approach assumes that the dimension of the data is a fixed small constant comparing with the sample size. However, this assumption may not be true in this big data era when the number of measured variables is usually large. To list a few, some modern data sets coming from climate change data, stock market data in finance and micro-array data in biology are often high-dimensional data. For more on high-dimensional data examples, the works of Donoho (2000), Johnstone (2001), Johnstone (2006) and Fan and Li (2006) are indicated.

The problem of testing independence in high-dimensional data has received considerable interest even in the most recent literature. The work of Han and H. (2017) investigated testing the mutually independence among all entries of a P-dimensional random vector based on N independent observations, where P and N both grow and P/N can diverge or converge to any non-negative value. They focused on nonparametric rank-based tests and their optimality, including Kendall’s tau ( Kendall (1938)) and Spearman’s rho ( Spearman (1904)). To check the total independence of a random vector without Gaussian assumption in high dimensions, a test based on the sum of squares of pairwise Kendall’s taus was developed by Mao (2018). They showed that the test can be justified when both the dimension and the sample size go to infinity simultaneously and applied to HDLSS (high dimension, low sample size) data. Testing independence among a number of high-dimensional random samples can be found at Chen and Liu (2018). They arranged N samples of a P-dimensional random vector into a \(P \times N\) data matrix, and tested the independence among the columns with the assumption that the data matrix follows a matrix-variate normal distribution. They studied the effect of correlated samples when using multiple testing of Pearson’s correlation coefficients and showed the need for conducting sample independence test. Székely and Rizzo (2013) developed a distance correlation t-test (Dcor) for testing the independence of random vectors in high dimension, where the dimensions are arbitrarily high and not necessarily equal. Yao et al. (2018) introduced an \(\ell _2\)-type test for testing mutual independence for high-dimensional data, where they constructed the test statistic on the basis of pairwise distance covariance. And their proposed test captured non-linear and non-monotone dependence. The limiting null distribution of the test statistic was shown to be standard normal distribution. Yang and Pan (2015) proposed a new test statistic for testing independence between two high-dimensional random vectors. Their test statistic is based on the sum of regularized sample canonical correlation coefficients of the two vectors. These recent work can be summarized into three main categories: test the independence among entries within one vector; test the independence of observations from one vector; and test the independence of two or more vectors.

In this paper, we focus on testing independence between the components of two multivariate Gaussian vectors, under high dimension settings. We state the motivations and problem formulations in the following sequel.

Consider the random row vectors \(\mathbf {X}=(X_1, \ldots , X_{P_1})\) and \(\mathbf {Y}=(Y_1, \ldots , Y_{P_2})\), where the transposes \(\mathbf {X}^{\prime }\) and \(\mathbf {Y}^{\prime }\) are multivariate Gaussian vectors with dimensions \(P_1\) and \(P_2\), respectively, that is, \(\mathbf {X}^{\prime } \sim N_{P_1}({\varvec{\upmu }}_1,\varSigma _1)\) and \(\mathbf {Y}^{\prime } \sim N_{P_2}({\varvec{\upmu }}_2,\varSigma _2)\). Also, assume that the mean vectors \({\varvec{\upmu }}_1\), \({\varvec{\upmu }}_2\) and the variance matrices \(\varSigma _1\) and \(\varSigma _2\) are unknown and not necessarily equal.

Let \(\mathbf{X }=(\mathbf {X}^{\prime }_1, \ldots ,\mathbf {X}^{\prime }_{N})^{\prime }\) and \(\mathbf{Y }=(\mathbf {Y}^{\prime }_1, \ldots ,\mathbf {Y}^{\prime }_{N})^{\prime }\) denote the matrices with random samples taken from the distributions of \(\mathbf {X}^{\prime }\) and \(\mathbf {Y}^{\prime }\), respectively. It is of great importance to test independence between \(\mathbf {X}\) and \(\mathbf {Y}\) in a high dimension setting, where the dimensions \(P_1\) and \(P_2\) are much larger than the number of obtained observations, N. More specifically, our goal is to test if \(X_i\) and \(Y_j\) are independent for each pair of \(i \in \{1,\ldots ,P_1\}\) and \(j \in \{1,\ldots ,P_2\}\). Let \(\varSigma _{X,Y}=cov(\mathbf {X}^{\prime },\mathbf {Y}^{\prime })\) denote the covariance between \(\mathbf {X}\) and \(\mathbf {Y}\). We want to test:

where \(\mathbf{0 }\) is a \((P_1\times P_2)\) dimensional matrix of zeros. Note that, since the hypotheses are only on \(\varSigma _{X,Y}\), the covariance matrices \(\varSigma _1\) and \(\varSigma _2\) are nuisance parameters in this context.

It can be shown from Timm (2002) that, if \(P_1 \le N\) and \(P_2 \le N\), then one can use the likelihood ratio test statistic, \(\lambda (\mathbf{X },\mathbf{Y })\), for testing (1) based on the asymptotic chi-squared distribution of \(-2\log \lambda (\mathbf{X },\mathbf{Y })\):

where the notation |.| represents the absolute value of the determinant of a matrix, \(S_{\mathbf{X }}\) is the \(P_1 \times P_1\) sample covariance of \(\mathbf {X}\) based on the data \(\mathbf{X }\), \({\hat{\rho }}_{i,j}\) is the maximum likelihood estimator of the correlation between \(X_i\) and \(Y_j\), and \(S_{\mathbf{Y }}\) is the \(P_2 \times P_2\) sample covariance of \(\mathbf {Y}\) based on the data \(\mathbf{Y }\), that is:

with \({\bar{X}}=\frac{1}{N} \sum _{i=1}^{N} \mathbf {X_{i}};\)

and

with \({\bar{Y}}=\frac{1}{N} \sum _{j=1}^{N} \mathbf {Y_{j}}.\)

Finally, \(S(\mathbf{X },\mathbf{Y })\) is a \((P_1+P_2)\times (P_1+P_2)\)-dimensional matrix that combines the sample covariances in the following way:

where \(S_{\mathbf{X },\mathbf{Y }}\) is the sample covariance between \(\mathbf{X }\) and \(\mathbf{Y }\).

Note that \(S_{\mathbf{X }}\) is singular when \(P_1 > N\). Likewise, \(S_{\mathbf{Y }}\) is singular when \(P_2 > N\). Under such a high-dimensional situation, that is, when \(P_1 > N\) or \(P_2 > N\), the arguments for deriving the asymptotic chi-squared distribution of \(-2\log \lambda (\mathbf{X },\mathbf{Y })\) are no longer valid. Moreover, according to Bai and Zheng (2009), when the dimensions \(P_1\) and \(P_2\) are large, not necessarily larger than the number of observations, the traditional likelihood ratio test increases dramatically the size of the test.

Recently, Bai and Zheng (2009) proposed corrections for likelihood ratio tests to cope with high-dimensional effects when testing for a high-dimensional vector which follows normal distribution \(N_{p}({\varvec{\upmu }},\varSigma )\) with \(H_{0}:\varSigma =\mathbf{I }_p \text{ vs. } H_{1}:\varSigma \ne \mathbf{I }_p\), using central limit theorems for linear spectral statistics of sample covariance matrices and of random F-matrices. Their dimension p and sample size n go to infinity and \(\displaystyle {\lim _{n \rightarrow \infty }} \frac{p}{n}=y\in (0,1).\) In 2012, Jiang and Yang (2012) further extended the likelihood ratio test for covariance matrices of high-dimensional normal distributions to include the case when \(y=1\), using Selberg integral. Then, Jiang and Yang (2013) extended the likelihood ratio approach to the case of high-dimensional data with \(y\in (0,1]\) and the proposed likelihood ratio test statistics converges in distribution to normal distributions with explicit means and variances. Moreover, Jiang and Zheng (2013) proposed the corrected likelihood ratio test and large-dimensional trace criterion to test the independence of two large sets of multivariate variables. And their trace criterion can be used in the case of \(p > n\), while the corrected likelihood ratio test is unfeasible due to the loss of definition. However, although a number of literature focuses on corrections or extensions of likelihood ratio test for high-dimensional independence test when \(y\in (0,1]\), to our knowledge the case when \(y>1\) is new in the literature.

For the situations when \(N<P\) or even \(N<<P\), an empirical distance approach was used by Yamada and Nishiyama (2017) to test for a block diagonal covariance matrix in non-normal high-dimensional data. A test statistic based on the extended cross-data-matrix methodology (Ecdm) was developed by Yata and Aoshima (2013), and later extended by Yata and Aoshima (2016), for testing of the correlation matrix between two subsets of a high-dimensional random vector, where the Ecdm estimator was proved to be consistent and asymptotically Normal in high-dimensional settings.

The present work introduces a randomized test procedure for testing (1). The method works for unstructured covariance matrices, which is possible using the slicing procedure (Akdemir and Gupta 2011) and the Flip-flop algorithm (Srivastava et al 2008). The dimensions, \(P_1\) and \(P_2\), of the two vectors are allowed to be larger than the number of obtained observations, N. By means of an intensive Monte Carlo simulation study, we show that the proposed method ensures a true control of the Type I Error probability under the nominal level, while performing similarly to competing methods in terms of statistical power. This is a relevant advantage since competing methods presented inflated Type I Error probabilities in all of the simulated scenarios.

The remainder of this paper is organized as follows. In Sect. 2, we explain the flip-flop algorithm to obtain the estimator of a Gaussian covariance matrix. In Sect. 3, we develop a new test statistic based on flip-flop Kronecker delta covariance estimator which is similar to a conventional likelihood ratio test statistic but is applicable for high-dimensional data. We also describe the decision rule of a randomized test associated with the new test statistic. A detailed evaluation of the newly developed test, along with a comparison with two prominent methods, is given in Sect. 4. In Sect. 5, we apply our method to a sample of companies listed on the stock exchange to investigate if daily returns, among six sectors, are independent in a short-run time during the COVID-19 pandemic. Section 6 summarizes the main points.

2 Flip-flop estimator of Gaussian covariance matrices: an overview

The work of Silva and Liu (2018) introduced the mat matrix operator, which is an important tool to construct the method introduced in this paper.

Definition 2.1

(mat operator) Let \(p\in {\mathbb {N}} \) and \(q\in {\mathbb {N}} \) be such that \(p\times q=P\), and let A denote a \((1\times P)\)-dimensional matrix. The mat operator applied to A, denoted by \(mat_{p,q}(A)\), is a \((p\times q)\)-dimensional matrix obtained by slicing A into p vectors, each of q-dimension, and then stacking these q-dimensional vectors as row vectors. That is, if \(a_{j}\) denotes the jth entry of A, then:

where \(A_{l:t}=(a_{l},a_{(l+1)},\ldots ,a_{t})\).

Let \(\mathbf {W}_1, \ldots , \mathbf {W}_{N}\) denote a sequence of independent \(1\times P\)-dimensional vectors, where \(\mathbf {W}_j^{\prime }=(W_{1,j},\ldots ,W_{P,j})^{\prime } \sim N_{P}({\varvec{\upmu }},\varSigma ),\) and \(W_{i,j}\sim N(\mu _i,\sigma _{i}^{2})\), for each \(j=1,\ldots ,N\) and \(i=1,\ldots ,P\).

An unbiased estimator for \(\varSigma \), based on the sample

is

where \({\bar{W}}=\sum _{j=1}^{N}\mathbf {W}_j/N\). But, if \(N < P\), then \({{{\mathbf {S}}}}\) is singular. An alternative estimator has been proposed by Akdemir and Gupta (2011). The idea is to rewrite \(\varSigma \) in terms of the Kronecker delta covariance structure as:

where \(\varOmega \) and \(\varPsi \) denote two matrices of dimensions \(p\times p\) and \(q\times q\), respectively, and \(P=p\times q\). According to Silva and Liu (2018), the choice of p and q, which has to be restricted to minimization of \(p(p+1)/2+q(q+1)/2\), is such that \(N\le \max \{p,q\}\). They also suggest to use \(p<q\), which then ensures that any choice \(Np>q\) will provide non-singular estimates for \({{\varOmega }}\) and \({{\varPsi }}\).

To estimate \({{\varOmega }}\) and \({{\varPsi }}\), one can use the flip-flop algorithm introduced by Srivastava et al. (2008). The first step of flip-flop is based on slicing each of the P-dimensional row vectors \(\mathbf {W}_{j}\) in a way to obtain the \(p\times q\) random matrix, \(W^{*}_{j}\), in the following way:

It is also necessary to calculate \({\bar{W}}^{*}=\sum _{j=1}^{N}W^{*}_j/N\).

Set a counting variable to start with zero, say \(c=0\). The initial matrix of the iterative procedure is \({\hat{\varOmega }}_0(W)=\mathbf{I }_p\), where \(\mathbf{I }_p\) is the p-dimensional identity matrix. Let \({\hat{\varSigma }}^{(k)}_{\otimes }(W)\) denote the interim estimator of \(\varSigma \) at the cth iteration. While

make

where tr(A) is the trace of a matrix A, and \(\epsilon \) is a tolerance parameter for the discrepancy between the unbiased estimator, \({{\mathbf {S}}}\), and \({\hat{\varSigma }}_{\otimes }\).

Let \(c^*\) denote the first value of c for which (6) does not hold. This way, a non-singular estimator for the inverse of \(\varSigma ^{-1}\) is given by:

According to Theorem 2 by Tsiligkaridis et al. (2013), the flip-flop algorithm achieves high-dimensional consistency, that is:

when \(P\rightarrow \infty \) or \(N\rightarrow \infty \). Tsiligkaridis et al. (2013) also presented an exhaustive simulation study supporting the theoretical consistency of \({\hat{\varSigma }}^{-1}\).

It can happen that P is a prime number. Hence, in order to ensure feasibility of the estimator for any value of P, in the present paper we consider the transformation:

where \(Z_j \sim N(0,1)\). This way, one can always run \({\hat{\varSigma }}^{-1}_{\otimes }(\mathbf{V })\), with \(\mathbf{V }=({\tilde{V}}^{\prime }_1,\ldots ,{\tilde{V}}^{\prime }_N)^{\prime }\), to estimate the covariance structure in W since it will be embedded in the covariance of \(\mathbf{V }\).

3 Method

For testing (1) given the observations \(\mathbf{X }=(\mathbf {X}^{\prime }_1,\ldots ,\mathbf {X}^{\prime }_{N})^{\prime }\), \(\mathbf{Y }=(\mathbf {Y}^{\prime }_1,\ldots ,\mathbf {Y}^{\prime }_{N})^{\prime }\), and \(\mathbf{U }=\left( \mathbf{X },\mathbf{Y }\right) \), we propose the following test statistic:

where \({\hat{\sigma }}_{i,j}(\mathbf{U })\) is the component in row i and column j of the \((P_1+P_2)\times (P_1+P_2)\)-dimensional flip-flop Kronecker delta covariance estimator \({\hat{\varSigma }}_{\otimes }(\mathbf{U })\) solved from (9). This test statistic is basically the sum of the squared estimators of the correlations between X and \(\mathbf{Y }\), which is inspired on the likelihood ratio statistic in (2), where the information of the sample variances of X and Y are weighted by the information in the sample covariance between them.

Under the null hypothesis, the distribution of \(T(\mathbf{X },\mathbf{Y })\) is invariant with respect to the collection order of the sample units in \(\mathbf{X }\) and \(\mathbf{Y }\), that is, the sample units are exchangeable under \(H_0\). Therefore, each new configuration of the actually observed data order generates a new observation of \(T(\mathbf{X },\mathbf{Y })\) under \(H_0\). Thus, a permutation test is applicable.

Let \(\tau _1=(n_{1,1},\;n_{1,2},\ldots ,\;n_{1,N})\) denote the first set formed by N numbers randomly sampled, without replacement, from the set \(\eta =\left\{ 1,\;2,\ldots ,\; N\right\} \). This way, a first randomized form of \(\mathbf{X }\), say \(\mathbf{X }_1\), has the first row formed by the \(n_{1,1}\)th line of \(\mathbf{X }\), the second line has the \(n_{1,2}\)th line of \(\mathbf{X }\), and so on until the last line of \(\mathbf{X }_1\) which has the \(n_{1,N}\)th line of \(\mathbf{X }\). With this, \(T_1\) shall denote the result of \(T(\mathbf{X }_1,\mathbf{Y })\). Similarly, a second set \(\tau _2=(n_{2,1},\;n_{2,2},\ldots ,\;n_{2,N})\) of N numbers sampled without replacement from \(\eta \) has the indexes for forming \(\mathbf{X }_2\) from \(\mathbf{X }\), which gives \(T_2=T(\mathbf{X }_2,\mathbf{Y })\). Consider to repeat this procedure \((m-1)\) times, that is, until forming \(T_1,\ldots ,T_{m-1}\), a sequence of observations of \(T(\mathbf{X },\mathbf{Y })\) obtained from independent randomized configurations of \(\mathbf{X }\).

For \(T(\mathbf{X },\mathbf{Y })=t_0\) observed with the actual order of collection, define:

This way, the permutation p-value is given by:

Therefore, given an arbitrary tolerance (level) \(\alpha \in (0,1)\), \(H_0\) is rejected if:

3.1 Validity of the permutation p-value

The permutation test as well as the Monte Carlo test produce ‘valid’ test criterion (Fisher 1935; Pitman 1937; Dwass 1957; Barnard 1963; Hope 1968; Birnbaum 1974). A test criterion is said valid if the related p-value, say \(P(\mathbf{X })\), is such that

for each \(\alpha \in (0,1)\). To see this, let S denote the support of \({\hat{p}}\), that is:

and let \(T_{(i)}\) denote the ith observation in the ordered sequence of \(T_{i's}\). If the point \(g/m\in S\) is a realization of \({\hat{p}}\), it means that the number of simulated \(T_{i's}\) greater than or equal to \(t_0\) is equal to \(g-1\). This is equivalent to observing the event \(\left\{ T_{(m-g)}< t_0\le T_{(m-g+1)}\right\} \) for \(g=2,\ldots ,m-1\), \(\left\{ T_{(m-1)}< t_0\right\} \) for \(g=1\), and \(\left\{ t_0\le T_{(1)}\right\} \) for \(g=m\). Note that, under \(H_0\), the sample units are exchangeable, then the points of the set \(\left\{ T_{(m-g)}< t_0\le T_{(m-g+1)},\; g=2,\ldots ,m-1\right\} \cup \left\{ T_{(m-1)}< t_0\right\} \cup \left\{ t_0\le T_{(1)}\right\} \) are equiprobable under \(H_0\), then

Therefore, for \(r=\left\lfloor \alpha m\right\rfloor \), we conclude that \({\hat{p}}\) is valid because

Besides, irrespectively to the validity of \(H_0\), \({\hat{p}}\rightarrow p\) almost everywhere as \(N\rightarrow \infty \) and \(m\rightarrow \infty \), where p is the exact p-value. For a given \(t_0\),

as m goes to infinity, where \(F_{N}(.)\) is the cumulative probability function of \(T(\mathbf{X },\mathbf{Y })\) for a fixed sample size N. Finally, by the law of large numbers, we have

as \(N\rightarrow \infty \).

Further properties and extensions of the permutation and Monte Carlo tests, including their sequential versions, are derived by Silva and Assunção (2018).

3.2 Speeding up run-time

In order to save execution time, in this paper we use a sequential version of the permutation test proposed by Silva and Assunção (2009). Now, the number of configurations needed to calculate the p-value, say L, is a random variable defined as the first time when there are h simulated values greater than or equal to \(t_0\). If this has not occurred before step \((m-1)\), then the randomization is stopped at step \((m-1)\), that is, L is equal to (m-1). As demonstrated by Silva and Assunção (2009), the p-value followed by such sequential randomized design is given by:

4 Type I error probability and statistical power: a simulation study

A simulation study was conducted in order to compare the Type I Error probability and the statistical power of Ft (our method newly developed in this paper) with the two prominent methods proposed by Yata and Aoshima (2016) (Ecdm) and by Székely and Rizzo (2013) (Dcor).

4.1 Tuning parameters scenarios

Under significance levels of \(\alpha =0.001,\; 0.01,\; 0.05\), and inspired in the setting used by Yao et al. (2018), we considered a block-diagonal structure for the correlations between the entries \(X_i\) and \(Y_j\): \(cor(X_i, Y_j)= \rho \), with \(0\le |i-j|<P\), \(\rho =0,\; 0.1,\; 0.25,\; 0.5\), and \(var(X_i)=var(Y_j)=1\) for each \(i,\; j \in \{1,\ldots , P\}\).

In addition, we fixed the intra-class covariances \(\varSigma _1=\varSigma _2\), for which we considered three covariance structures also inspired in the setting used by Yao et al. (2018):

(\(i^{\prime }\)) The block-diagonal structure: \(cov(X_i, X_j)=cov(Y_i, Y_j)= r\) with \(0<|i-j|<P\), and \(r=0.1,\; 0.5\);

(\(ii^{\prime }\)) The AR(1) structure: \(cov(X_i, X_j)=cov(Y_i, Y_j)=r^{|i-j|}\) with \(0<|i-j|<P\), and \(r=0.1,\; 0.5\);

(\(iii^{\prime }\)) The band structure: for \(i,j \in \{1,\ldots ,P\}\), \(cov(X_i, X_j)=cov(Y_i, Y_j)=r^{|i-j|}\) when \(0<|i-j|<3\), \(r=0.1,\; 0.5\), and \(cov(X_i, X_j)=cov(Y_i, Y_j)=0\) when \(|i-j|\ge 3\).

Finally, for \(P=50,\; 80,\; 100,\; 150,\; 200\), the sample sizes were chosen according to the rule \(N=P/s\) among three options, small, moderate, and large related to \(s=10\), \(s=5\), and \(s=1\), respectively.

4.2 Simulation study results

For the scenario of high correlation (\(\rho =0.5\)), the three methods presented statistical power estimates very close to 1 even under the small dimension case (\(P=50\)). Thus, descriptions, graphs and tables in this section are concentrated on the scenarios of absence, small and intermediary correlation cases (\(\rho =0,\; 0.1,\; 0.25\)).

Tables 1, 2, and 3 present the estimated Type I Error probabilities (\(\rho =0\)) of Ft, Ecdm and Dcor based on the block-diagonal \((i^{\prime })\), the AR(1) \((ii^{\prime })\), and the band \((iii^{\prime })\) structures for the intra-class covariances, respectively, all under \(r=0.5\). The calculations for the AR(1) structure \((ii^{\prime })\) under \(r=0.1\) are shown in Table 6 of the Appendix. This is shown for \(\alpha =0.01\) and \(\alpha =0.05\). It is clear that the test sizes of Ecdm and Dcor are not controlled under the nominal level for the block-diagonal structure \((i^{\prime })\), and actually they are quite liberal even for large dimensions such as \(P=200\). Moreover, for Dcor, such inflation on the Type I Error probability seems to increase with the ratio \(s=P/N\), which is a serious limitation in high dimension problems, where the number of variables is usually far bigger than the sample size. This is not a limitation of Ft because it guarantees a \(\alpha \)-level test by theoretical construction, which is corroborated with the Type I Error probabilities estimates provided in all the evaluated scenarios. For structures \((ii^{\prime })\) and \((iii^{\prime })\), Dcor does preserves the Type I Error probability under the \(\alpha \) level, while Ecdm does not.

Regarding the statistical power estimates, it is important to ensure calculations under the same \(\alpha \)-level from all methods for a fair comparison. Hence, the comparisons Ft vs Ecdm and Ft vs Dcor must be performed separately since Ecdm and Dcor showed different test sizes in all scenarios. Table 4 has the power estimates for the comparison Ft vs Ecdm, and Table 5 has the power estimates for the comparison Ft vs Dcor. The power magnitudes are similar between the two methods in each table in almost all of the evaluated scenarios.

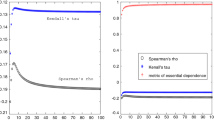

Note from Table 5 that the power is close to 1 for large values of P. For evaluating the performance of the three methods under smaller power magnitudes, consider to take a very small tolerance on the Type I Error probability. For this, Fig. 1 in the Appendix shows the Type I Error probability estimates under \(\alpha =0.001\). This figure highlights again that Ecdm and Dcor do not control the test size under the nominal level, while Ft has uniformly presented test size estimates very close to the nominal \(\alpha =0.001\). Moreover, Ft performs very similarly to Ecdm and Dcor in terms of statistical power, as illustrated with Fig. 2 in the Appendix.

Now, consider to compare these methods under the small correlation case (\(\rho =0.1\)). For this, Fig. 3 in the Appendix shows the power estimates under \(\alpha =0.01\). The power magnitudes are smaller than 0.3 for the situations with \(s=5\) and \(s=10\). As already evidenced with all the scenarios used in these simulation study, Ft performs very similar to Ecdm and Dcor in terms of statistical power.

5 Independence between Brazilian economic sectors: the COVID-19 period

The empirical application in this section follows the same direction of other studies ( Bao and Zhou (2017); Székely and Rizzo (2013) and Yang and Pan (2015)), analyzing the independence between different sectors through daily returns of stocks. However, this example is applied to a sample of companies listed on the stock exchange of an emerging country, the B3 S. A. Brasil Bolsa Balcão (B3), in the COVID-19 pandemic context. This is important since the dependence between the considered sectors may differ from the pre-existing relation before the shock caused by COVID-19.

In 2017, B3 was the fifth-largest capital and financial market in the world and the country’s GDP was among the top 10. Currently, projections for Brazilian GDP are discouraging. According to the World Bank’s June report Group (2020), it is estimated a plummet around 8.0% of economic activity in Brazil in the year of 2020 (owing to mitigation measures, plunging investment, and soft global commodity prices), above the expected average global economy drop of 5.2%. The proposal is to investigate the common interest in testing if different sectors are independent in a period when economic activities were classified according to their essentiality by the Brazilian government.

The first Covid-19 case in Brazil appeared in the end of February (26th), but the country started to monitor suspect cases since February 21thFootnote 1. Different from other countries (in Europe, North America, and Asia) Brazil had time for preparing its political strategy to control the virus. Even so, the Brazilian financial market was already reacting to the effects of having to close the international trade and to the variations of the financial markets. In the studied period, many other facts impacted the Brazilian market, like the deadlock between Saudi Arabia and Russia about Oil production, and the political crisis in the Brazilian government.

In March, B3 had a circuit breaker four times, in the 9th, 11th, 12th and 16th. On March 9th, oil prices fell sharply with a price war between Saudi Arabia and Russia. The oil companies listed, and with significant participation in the stock index in Brazil, influenced the circuit breaker. On days 11th and 12th, the effect was a “rebound” from the frustrated high expectation of investors from the previous day (10th) and the stock markets falling because of the US government’s apathy until then. Furthermore, the World Health Organization (WHO) declared that the Coronavirus pandemic was in place. Finally, on March 16th, the measures announced by the Federal Reserve (FED) and other central banks were frustrated (monetary authorities cutting aggressively interest rates). Moreover, the fear about the pandemic impacts on the economy increased. For instance, considering the stock of the Brazilian index (Ibovespa), airlines and travel companies such as AZUL “GOL”, CVC Brasi, among others, were the most affected in the period.

The Brazilian Government is facing COVID-19 crisis and many political problems. In April, the Minister of Justice left his position reporting problems in the administration of the Brazilian President. From February to April, five ministers of the current government were exonerated from the positions of Justice, Education, Citizenship, Regional Development, and Health. In addition, there had been a conflict between the stance and policies adopted by mayors and state governors with the Brazilian president.

On March 17th, the first death of the COVID-19 was registered in Brazil, and on the 20th the government issued a decree, No. 10,282, which regulates Law No. 13,979, of February 6th, 2020, to define public services and essential activities during the health crisis. Among the activities comprised are security, transportation, communication, energy services, food and hygiene items production, banking and postal services, and fuel production and sale. Over the months this list was altered. In May, it had already been extended to more than 50 items, including industry, construction and some beauty and health services. Despite the guidelines by the federal government decree, the states and municipalities still had autonomy to set their own isolation rules. This led to an heterogeneity on the impact of the measures adopted in different regions of the country.

Since there are many different factors in the short period under investigation, a high dimension test is necessary. It is important to conduct tests in the short term for investors to better allocate/diversify their resources. This is of great importance on an unprecedented crisis, in which there is a relative mismatch between the different sectors of the economy, some of them non-essential, closed for some time, while others remain in full operation. In addition, it is a scenario with a large number of companies closing, and with the rising of unemployment in BrazilFootnote 2.

The original data used here are the historical returns, calculated using the adjusted closing prices of 154 stocks (from 6 different sectors) for the trading days of February, March and April of 2020, the first three months of the pandemic in Brazil. The dataset is derived from Yahoo Finance, the numbers of the stocks (dimensions, P) in each sector and trading days (observations, N) are shown in Table 7 of the Appendix part.

For supporting the multivariate normal distribution assumption, we have applied Box-Cox transformations to each sectorial vector, Box and Cox (1964)Footnote 3. The classification sector codes can be obtained on the B3 websiteFootnote 4.

The civil construction sub-sector was considered a sector and was analyzed separately from the cyclical consumption sector. In addition, the fabric, clothing, and footwear sub-sector was removed from cyclical consumption in the analysis. The small number of trading days for the second half of February are due to the Carnival holiday in Brazil. Only shares that had some liquidity in the Brazilian market were used. Each sector consists of a number of stocks, which can be treated as a vector (as in Table 7). Our goal is to check the independence among the vectors using our test method, Ft, on a total of 15 pairs of sectors (Tables 8, 9, 10, 11,12, and 13 in the Appendix).

The sector of Non-Cyclical Consumer stands out as it is more independent with all of the other sectors. This sector includes processed food companies and agricultural companies, that were less impacted by the crisis for not stopping operations. In contrast, the cyclical consumption sector proved to be more often dependent with other sectors in the period studied. A possible explanation is that it contains companies linked to the non-essential group, that had to stop operating for a while (or had to operate on a smaller scale, remotely). This sector also has companies that were directly impacted by the crisis, such as travel companies like “CVC Brasil”, fitness centers “SMART FIT”, car rentals, “LOCALIZA”, and commerce such as “LOJAS RENNER”, “AREZZO”, “MAGZ LUIZA”, among others.

According to our results, more pairs of sectors are independent in the 1-15th and 16-29th days of February. On the other hand, more sectors are dependent on 1-15th days of March, and in the last days of April. This suggests a different behavior in the Brazilian market during the pandemic COVID-19, which demonstrates the importance of evaluating such statistics in the short term. The causes for such short-term effects may be related to what was already mentioned, instability in international markets, oil prices, the evolution of the coronavirus, the blockade of non-essentials sectors, and the Brazilian political instability, which coincides with the dates of less independence between sectors of the Brazilian economy.

6 Discussion

The likelihood ratio test is widely used for the problem of testing the independence between two multivariate Gaussian vectors. However, under a high dimension situation, the conventional likelihood ratio test statistic is no longer computable. The method presented in this paper has demonstrated its capability for testing independence among the components of two multivariate Gaussian vectors in high dimensions and is an appealing method from several aspects. Firstly, the test criterion is valid, which was shown through both analytical and numerical arguments, that is, Ft controls the size of the test under the nominal level. In contrast, the test size of the two prominent methods, Ecdm and Dcor, turns out to be much higher than the nominal levels depending on the covariance structures of X and Y. Secondly, when comparing the power among three methods under small dimension cases and high dimension cases, it is clear that Ft performs very similarly to Ecdm and Dcor. Thirdly, our method works for unstructured covariance matrices due to the fact that our test statistic is based on the elements of a flip-flop Kronecker delta covariance estimator.

As a disadvantage of the proposed method, to apply Ft one needs to suppose that the Kronecker delta structure in (4) holds. For the cases where \(N>P\), one can use the test by Srivastava et al. (2008). But, for the best of our knowledge, for the case where \(N\le P\) such a type of test is not available in the literature yet. The construction of a test of Kronecker delta structure, or a class of transformations on the data in order to reach such a structure, are important topics for future investigations.

Another important point regards the implications of changing the ordering of the variables for the slicing method. As demonstrated by Tsiligkaridis et al. (2013), the flip-flop algorithm achieves high-dimensional consistency irrespectively to how the components of X and Y are arranged.

For applications where P is prime, the proposed procedure involves coupling one independent normal variable to run the slicing method. We performed an additional simulation study considering \(P=49,\; 81,\; 99,\; 153,\; 201\), which are not prime numbers. This way, we were able to compare the performance of the situations with P variables with the one with \((P+1)\) variables. The power magnitudes were the same in all scenarios (results not shown here), that is, adding one independent Gaussian variable in the P-variate vector does not affect the performance of the proposed test. The results were consistently equal to those with \(P= 50,\; 80,\; 100,\; 150,\; 200\).

Finally, it merits to remark that Ft was constructed for multivariate Gaussian data, where zero linear correlation implies independence. In more general applications, where normality may fail, Dcor is a proper tool as it captures dependencies between variables other than the linear correlation.

Notes

The Coronavirus Timeline in Brazil is found on https://www.sanarmed.com/linha-do-tempo-do-coronavirus-no-brasil

They were performed in Software R, using the “car” library and the “powerTransform” and “bcPower” functions.

References

Akdemir D, Gupta A (2011) Array variate random variables with multiway Kronecker delta covariance matrix structure. J Algebra Stat 2:98–113

Bai JDYJFZ, Zheng S (2009) Corrections to LRT on large dimensional covariance matrix by RMT. Ann Stat Biom 37:3822–3840

Bao HJPGZ, Zhou W (2017) Test of independence for high-dimensional random vectors based on freeness in block correlation matrices. Electron J Stat 11:1527–1548

Barnard G (1963) Discussion of professor Bartlett’s paper. J R Stat Soc 25B:294

Birnbaum Z (1974) Reliability and Biometry, SIAM, Philadelphia, chap Computers and unconventional test-statistics, pp 441–458

Box GEP, Cox DR (1964) An analysis of transformations. J R Stat Soc B 26(2):211–252

Chen X, Liu W (2018) Testing independence with high-dimensional correlated samples. Ann Stat 46:866–894

Donoho DL (2000) High-dimensional data analysis: The curses and blessings of dimensionality. Aide-Memoire of the lecture in AMS conference Math challenges of 21st Century

Dwass M (1957) Modified randomization tests for nonparametric hypotheses. Ann Math Stat 28:181–187

Fan J, Li R (2006) Statistical challenges with high dimensionality: Feature selection in knowledge discovery. Proceedings of the Madrid International Congress of Mathematicians

Fisher R (1935) The design of experiments. Hafner, New York

Group WB (2020) Global economic prospects, June 2020: pandemic, recession: the global economy in crisis. World Bank Publications

Han CSF, Liu H (2017) Distribution-free tests of independence in high dimensions. Biometrika 104:813–828

Hope A (1968) A simplified Monte Carlo significance test procedure. J R Stat Soc 30B:582–598

Jiang BZD, Zheng S (2013) Testing the independence of sets of large-dimensional variables. Sci China Math 56:135–147

Jiang JTD, Yang F (2012) Likelihood ratio tests for covariance matrices of high-dimensional normal distributions. J Stat Plann Inference 142:2241–2256

Jiang T, Yang F (2013) Central limit theorems for classical likelihood ratio tests for high-dimensional normal distributions. Ann Stat 41:2029–2074

Johnstone IM (2001) On the distribution of the largest eigenvalue in principal components analysis. Ann Stat 29:295–327

Johnstone IM (2006) High dimensional statistical inference and random matrices. Proc International Congress of Mathematicians

Kendall MG (1938) A new measure of rank correlation. Biometrika 30:81–93

Mao G (2018) Testing independence in high dimensions using Kendall’s Tau. Comput Stat Data Anal 117:128–137

Pitman E (1937) Significance tests which may be applied to samples from any population. R Stat Soc Suppl 4:119–130

Silva I, Assunção R (2009) Power of the sequential Monte Carlo test. Seq Anal 28:163–174

Silva I, Assunção R (2018) Truncated sequential Monte Carlo test with exact power. Braz J Probab Stat 32:215–238

Silva IR, Maboudou-Tchao EM, de Figueiredo WL (2018) Frequentist-Bayesian Monte Carlo test for mean vectors in high dimension. J Comput Appl Math 333:51–64

Spearman CE (1904) The proof and measurement of association between two things. Am J Psychol 15:72–101

Srivastava M, von Rosen T, von Rosen D (2008) Models with a Kronecker product covariance structure: estimation and testing. Math Methods Stat 17:357–370

Székely GJ, Rizzo ML (2013) The distance correlation t-test of independence in high dimension. J Multivar Anal 117:193–213

Timm N (2002) Applied multivariate analysis. Springer Verlang, New York

Tsiligkaridis T, Hero A III, Zhou S (2013) On convergence of Kronecker graphical lasso algorithms. IEEE Trans Signal Process 61(7):1743–1755

Yamada HMSNY, Nishiyama T (2017) Testing block-diagonal covariance structure for high-dimensional data under non-normality. J Multivar Anal 155:305–316

Yang Y, Pan G (2015) Independence test for high dimensional data based on regularized canonical correlation coefficients. Ann Stat 43:467–500

Yao S, Zhang X, Shao X (2018) Testing mutual independence in high dimension via distance covariance. J R Stat Soc B 80:455–480

Yata K, Aoshima M (2013) Correlation tests for high-dimensional data using extended cross-data-matrix methodology. J Multivar Anal 117:313–331

Yata K, Aoshima M (2016) High-dimensional inference on covariance structures via the extended cross-data-matrix methodology. J Multiva Anal 151:151–166

Acknowledgements

The first author has received support from Conselho Nacional de Desenvolvimento Científico e Tecnológico - CNPq, Brazil, grant #301391/2019-0, and from Fundação de Amparo à Pesquisa do Estado de Minas Gerais (FAPEMIG), Brazil. We are grateful to the Associate Editor and two anonymous referees for their helpful suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The corresponding author’s research was funded by the Conselho Nacional de Desenvolvimento Científico e Tecnológico - CNPq, Brazil, grant #301391/2019-0.

Appendix

Appendix

Type I Error probabilities (\(\rho =0\)) of Ft, Ecdm and Dcor methods using \(\alpha =0.001\). The simulations were performed using the block-diagonal structure \((i^{\prime })\) for the intra-class covariances with \(r=0.5\). The settings were \(N= P/s\), with \(s= 1,\; 5,\; 10\), and \(P=50,\; 80,\; 100,\; 150,\; 200\). The estimates were based on \(M=2000\) data sets simulated for each configuration of P, N and s

Statistical power of Ft, Ecdm and Dcor methods with the block-diagonal structure under \(\rho =0.25\) using \(\alpha =0.001\). The simulations were performed using the block-diagonal structure \((i^{\prime })\) for the intra-class covariances with \(r=0.5\). The settings were \(N=P/s\), with \(s= 1,\; 5,\; 10\), and \(P=50,\; 80,\; 100,\; 150,\; 200\). The estimates were based on \(M=2000\) data sets simulated for each configuration of P, N and s

Statistical power of Ft, Ecdm and Dcor methods under \(\rho =0.1\) with the block-diagonal structure using \(\alpha =0.01\). The simulations were performed using the block-diagonal structure \((i^{\prime })\) for the intra-class covariances with \(r=0.5\). The settings were \(N=P/s\), with \(s= 1,\; 5,\; 10\), and \(P=50,\; 80,\; 100,\; 150,\; 200\). The estimates were based on \(M=2000\) data sets simulated for each configuration of P, N and s

Rights and permissions

About this article

Cite this article

Silva, I.R., Zhuang, Y. & Junior, J.C.A.d.S. Kronecker delta method for testing independence between two vectors in high-dimension. Stat Papers 63, 343–365 (2022). https://doi.org/10.1007/s00362-021-01238-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00362-021-01238-z